I think the °C/W ratings we are used to seeing are based on a very large temperature difference between the heatsink and ambient (70°C), which increases the efficiency of the thermal transfer. They also assume vertical orientation and passive cooling in a still room. In our case, though, the heatsinks are often only a few degrees above ambient and mounted horizontally, so the °C/W rating is really just a guide.
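To illustrate why a datasheet rating is only a guide at small temperature differences, here is a rough sketch. The naive use of a °C/W rating is: temperature rise = heat load × rating. The second function assumes, hypothetically, that natural-convection heat transfer scales with ΔT^0.25 (a common laminar-flow approximation), so the effective resistance grows as the rise shrinks. The 70°C reference comes from the post. The scaling exponent, the function names, and the 10 W / 0.5 °C/W example are assumptions, and radiation and orientation effects are ignored.

```python
"""Sketch: naive vs. scaled use of a heatsink's degC/W rating.

ASSUMPTION (not from the post): natural-convection heat transfer scales
roughly with dT ** 0.25, so the effective resistance is
R(dT) = R_rated * (dT_rated / dT) ** 0.25. Radiation and orientation are ignored.
"""


def rise_from_rating(heat_w: float, rated_c_per_w: float) -> float:
    """Naive estimate: temperature rise (degC) = heat load (W) x rated degC/W."""
    if heat_w < 0 or rated_c_per_w <= 0:
        raise ValueError("heat_w must be >= 0 and rated_c_per_w > 0")
    return heat_w * rated_c_per_w


def rise_with_scaling(heat_w: float, rated_c_per_w: float,
                      rated_dt: float = 70.0) -> float:
    """Rise (degC) if resistance follows R_rated * (rated_dt / dT) ** 0.25.

    Solving dT = P * R_rated * (rated_dt / dT) ** 0.25 for dT gives the
    closed form dT = (P * R_rated) ** 0.8 * rated_dt ** 0.2.
    """
    naive = rise_from_rating(heat_w, rated_c_per_w)
    return naive ** 0.8 * rated_dt ** 0.2


if __name__ == "__main__":
    # Hypothetical example: 10 W of heat on a heatsink rated 0.5 degC/W.
    print(f"naive:  {rise_from_rating(10, 0.5):.1f} degC")
    print(f"scaled: {rise_with_scaling(10, 0.5):.1f} degC")
```

At a 70°C rise the two estimates agree. Below that, the scaled estimate comes out higher (about 8.5°C versus 5°C in the example), which is the direction described above: the rating flatters a heatsink that is running only slightly above ambient.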

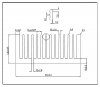

Going back to 2008, KNNA recommended a minimum heatsink surface area per watt of dissipation. Since then, quality LEDs have improved efficiency a lot (from about 20% to 40%), so we use a smaller number now: approx. 25cm²/W for active cooling and 75cm²/W for passive cooling. There is more to the story, though: the shape of the heatsink, the thickness of the baseplate, COB efficiency, and air circulation in the space all affect the outcome. The 75cm²/W figure assumes about 40% COB efficiency, a thick baseplate, wide short fins, and slight air movement (ideal conditions).
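Here is a minimal sketch of applying those rule-of-thumb figures, assuming they are meant per watt of LED input power. The function and dictionary names and the 50 W example are hypothetical. Treat the result as a starting point only, since heatsink shape, baseplate thickness, and airflow still matter.

```python
"""Sketch: minimum heatsink area from the rule-of-thumb figures in the post.

The figures are per watt of LED input power and assume roughly 40% efficient
COBs under good conditions (thick baseplate, wide short fins, some airflow).
"""

# cm^2 of heatsink surface per watt of input power (figures from the post).
AREA_PER_INPUT_W_CM2 = {"active": 25.0, "passive": 75.0}


def min_heatsink_area_cm2(input_watts: float, cooling: str) -> float:
    """Return the suggested minimum surface area in cm^2."""
    if input_watts < 0:
        raise ValueError("input_watts must be non-negative")
    if cooling not in AREA_PER_INPUT_W_CM2:
        raise ValueError(f"cooling must be one of {sorted(AREA_PER_INPUT_W_CM2)}")
    return input_watts * AREA_PER_INPUT_W_CM2[cooling]


if __name__ == "__main__":
    # Hypothetical 50 W COB.
    for mode in ("active", "passive"):
        print(f"{mode}: {min_heatsink_area_cm2(50, mode):.0f} cm^2")
```

For the 50 W example, this gives 1250 cm² with active cooling and 3750 cm² for passive cooling.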

Many LEDs run in the 30-40% efficiency range, but they can go as high as 70%, so working with surface area per watt of heat would give a more accurate guideline: 40cm²/W of heat for active cooling and 125cm²/W of heat for passive cooling. I have been reluctant to go that route because I don't want to confuse the issue.
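Converting between the two kinds of figure is straightforward if you assume that every watt not leaving as light ends up as heat in the heatsink. Below is a minimal sketch under that assumption. The names and the 50 W example are hypothetical. It also shows that the two sets of numbers above line up at 40% efficiency.

```python
"""Sketch: sizing by watts of heat instead of watts of input.

ASSUMPTION: all input power not emitted as light becomes heat in the heatsink.
"""

# cm^2 of heatsink surface per watt of heat (figures from the post).
AREA_PER_HEAT_W_CM2 = {"active": 40.0, "passive": 125.0}


def heat_watts(input_watts: float, efficiency: float) -> float:
    """Heat load = input power x (1 - light-output efficiency)."""
    if not 0.0 <= efficiency < 1.0:
        raise ValueError("efficiency must be in [0, 1)")
    return input_watts * (1.0 - efficiency)


def min_area_from_heat_cm2(input_watts: float, efficiency: float,
                           cooling: str) -> float:
    """Suggested minimum surface area in cm^2 for a given COB efficiency."""
    return heat_watts(input_watts, efficiency) * AREA_PER_HEAT_W_CM2[cooling]


if __name__ == "__main__":
    # Cross-check: at 40% efficiency, 60% of each input watt is heat, so the
    # per-input-watt figures become 40 * 0.6 = 24 (about 25) and 125 * 0.6 = 75.
    for eff in (0.4, 0.7):
        area = min_area_from_heat_cm2(50, eff, "passive")
        print(f"50 W COB at {eff:.0%} efficiency, passive: {area:.0f} cm^2")
```

With the same 50 W COB, the passive estimate is 3750 cm² at 40% efficiency but only 1875 cm² at 70%. This is why a heat-based figure tracks high-efficiency COBs better than a fixed per-input-watt number.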

Anyway, using those guidelines you should get a heatsink temperature just a few degrees above ambient and a temperature droop of 0.5-4%. I try to measure the hottest part of the heatsink without letting the light and infrared output of the COB interfere (often in between the fins, behind the COB).
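The post doesn't say exactly how droop is measured. One common approach, assumed here, is to take a light-meter reading right after switch-on and another once the heatsink has stabilized, then compare the two. Below is a minimal sketch of that arithmetic plus the heatsink-over-ambient check. The function names, meter readings, and temperatures are hypothetical.

```python
"""Sketch: checking a build against the post's targets.

ASSUMPTION: droop is the percent drop between a light reading at cold start
and one at thermal equilibrium, with the meter held in a fixed position.
"""


def droop_percent(cold_reading: float, warm_reading: float) -> float:
    """Percent loss of light output as the COB warms up."""
    if cold_reading <= 0:
        raise ValueError("cold_reading must be positive")
    return (cold_reading - warm_reading) / cold_reading * 100.0


def rise_over_ambient(heatsink_c: float, ambient_c: float) -> float:
    """Heatsink temperature above room temperature, in degC."""
    return heatsink_c - ambient_c


if __name__ == "__main__":
    # Hypothetical readings: PAR meter at switch-on and after warm-up,
    # IR thermometer on the hottest spot of the heatsink.
    droop = droop_percent(1000.0, 985.0)
    rise = rise_over_ambient(29.0, 25.0)
    print(f"droop: {droop:.1f}%  (post's range: 0.5-4%)")
    print(f"heatsink rise: {rise:.1f} degC over ambient")
```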

@Greengenes707 has pointed out that infrared thermometers can have a hard time with shiny objects. Shiny metal has low emissivity, so it gives off little infrared of its own and can reflect infrared from other sources, such as the COB or your own skin. Some IR thermometers seem to handle this better than others. I use a $15 baby food thermometer from Walmart, and it does well with mildly shiny surfaces. If you are doing a lot of IR testing, you can rough up the surface you are going to measure.